Research

Research into METAVERSE...

I am currently researching aspects of the metaverse, VR, and AR, and how to create meaningful experiences in our virtual lives. I work at mtion interactive inc.

Enhanced Videogame Livestreaming by Reconstructing an Interactive 3D Game View for Spectators [CHI 2022]

Authors: Jeremy Hartmann, Daniel Vogel

Abstract: Many videogame players livestream their gameplay so remote spectators can watch for enjoyment, fandom, and to learn strategies and techniques. Current approaches capture the player’s rendered RGB view of the game, and then encode and stream it as a 2D live video feed. We extend this basic concept by also capturing the depth buffer, camera pose, and projection matrix from the rendering pipeline of the videogame and packaging them all within an MPEG-4 media container. Combining these additional data streams with the RGB view, our system builds a real-time, cumulative 3D representation of the live game environment for spectators. This enables each spectator to individually control a personal game view in 3D. This means they can watch the game from multiple perspectives, enabling a new kind of videogame spectatorship experience.
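
As a rough illustration of how a depth sample, a projection matrix, and a camera pose can be combined into a 3D point, here is a minimal sketch. It is not the system's implementation: it assumes an OpenGL-style symmetric perspective projection with normalized device coordinates in [-1, 1], a camera-to-world pose given as a 4x4 row-major matrix, and a simple voxel dictionary as the cumulative store. The names `perspective`, `unproject`, and `accumulate` are hypothetical.

```python
import math


def perspective(fov_y_deg, aspect, near, far):
    """Build an OpenGL-style perspective matrix (assumed convention)."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def unproject(x_ndc, y_ndc, z_ndc, proj, cam_to_world):
    """Turn one depth-buffer sample (in NDC) into a world-space point."""
    a, b = proj[2][2], proj[2][3]
    # Invert z_ndc = (a * z_eye + b) / (-z_eye) to recover eye-space depth.
    z_eye = -b / (z_ndc + a)
    w_clip = -z_eye
    x_eye = x_ndc * w_clip / proj[0][0]
    y_eye = y_ndc * w_clip / proj[1][1]
    eye = (x_eye, y_eye, z_eye, 1.0)
    # Apply the camera pose (camera-to-world) to the eye-space point.
    return tuple(
        sum(cam_to_world[r][c] * eye[c] for c in range(4)) for r in range(3)
    )


def accumulate(cloud, point, color, voxel_size=0.05):
    """Store the newest colour per voxel so the cloud grows across frames."""
    key = tuple(math.floor(c / voxel_size) for c in point)
    cloud[key] = color


if __name__ == "__main__":
    proj = perspective(60.0, 16.0 / 9.0, 1.0, 10.0)
    # Hypothetical pose: camera at z = 5, looking down the negative z axis.
    pose = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 5], [0, 0, 0, 1]]
    cloud = {}
    accumulate(cloud, unproject(0.0, 0.0, -1.0, proj, pose), (255, 0, 0))
    print(len(cloud), "voxel(s) stored")
```

Keying the store by voxel keeps repeated observations of the same surface from growing the cloud without bound, which is one simple way to make the representation cumulative.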

Website: https://jjhartmann.github.io/EnhancedVideogameLivestreaming/

ACM: https://dl.acm.org/doi/10.1145/3491102.3517521

Merging the Real and the Virtual: An Exploration of Interaction Methods to Blend Realities [THESIS]

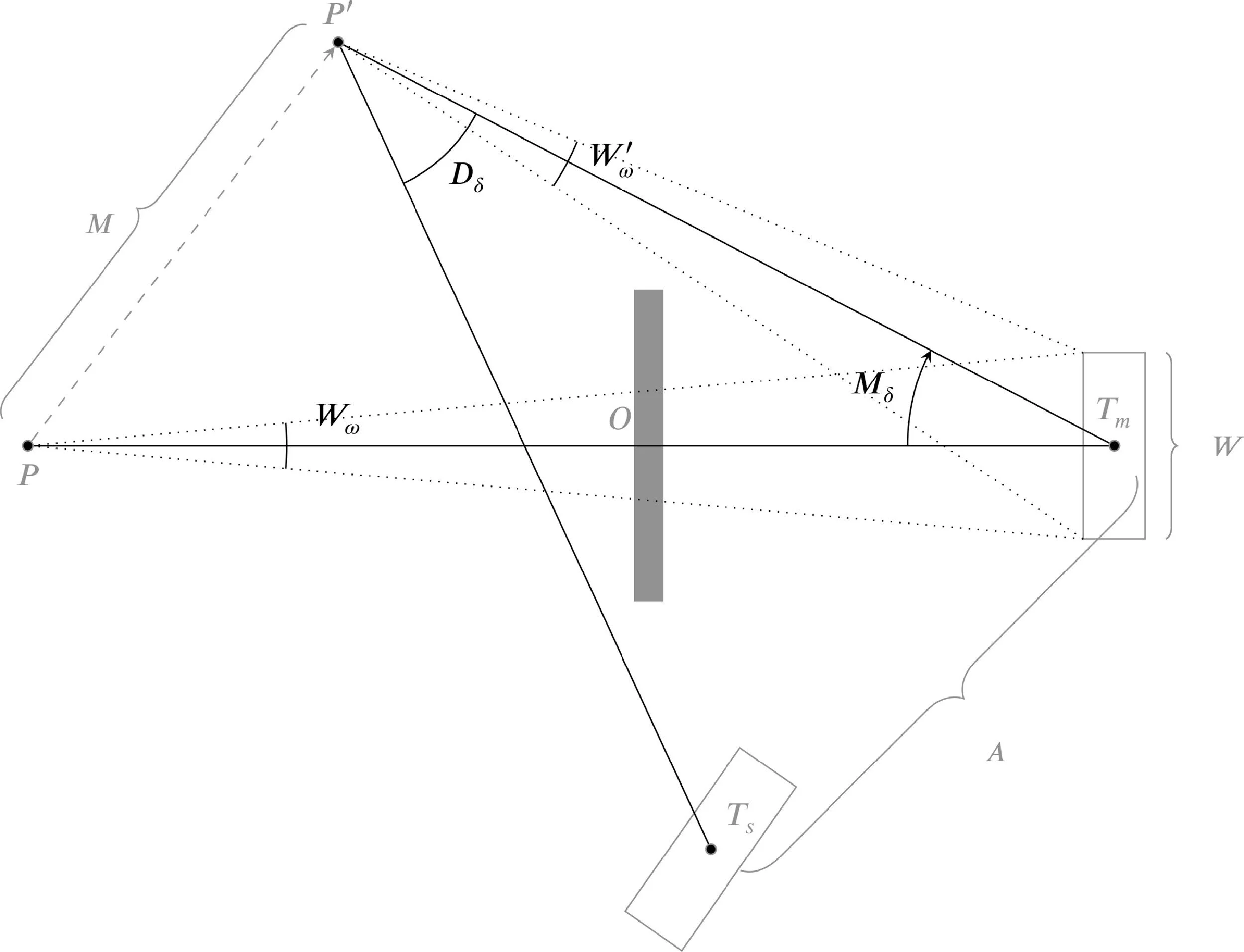

The Virtuality Continuum (modified from Milgram et al. 1995)

Authors: Jeremy Hartmann

Abstract: We investigate, build, and design interaction methods to merge the real with the virtual. An initial investigation looks at spatial augmented reality (SAR) and its effects on pointing with a real mobile phone. A study reveals a set of trade-offs between the raycast, viewport, and direct pointing techniques. To further investigate the manipulation of virtual content within a SAR environment, we design an interaction technique that utilizes the distance that a user holds a mobile phone away from their body. Our technique enables pushing virtual content from a mobile phone to an external SAR environment, interacting with that content, manipulating it with rotate-scale-translate operations, and pulling the content back into the mobile phone. This is all done in a way that ensures seamless transitions between the real environment of the mobile phone and the virtual SAR environment. To investigate the issues that occur when the physical environment is hidden by a fully immersive virtual reality (VR) HMD, we design and investigate a system that merges a realtime 3D reconstruction of the real world with a virtual environment. This allows users to freely move, manipulate, observe, and communicate with people and objects situated in their physical reality without losing their sense of immersion or presence inside a virtual world. A study with VR users demonstrates the affordances provided by the system and how it can be used to enhance current VR experiences. We then move to AR, to investigate the limitations of optical see-through HMDs and the problem of communicating the internal state of the virtual world with unaugmented users. To address these issues and enable new ways to visualize, manipulate, and share virtual content, we propose a system that combines a wearable AR display with a wearable SAR projector. Demonstrations showcase ways to utilize the projected and head-mounted displays together, such as expanding field of view, distributing content across depth surfaces, and enabling bystander collaboration.
We then turn to videogames to investigate how spectatorship of these virtual environments can be enhanced through expanded video rendering techniques. We extract and combine additional data to form a cumulative 3D representation of the live game environment for spectators, which enables each spectator to individually control a personal view into the stream while in VR. A study shows that users prefer spectating in VR when compared with a comparable desktop rendering.

OctoPocus in VR: Using a Dynamic Guide for 3D Mid-Air Gestures in Virtual Reality [TVCG 2021]

Authors: Katherine Fennedy, Jeremy Hartmann, Quentin Roy, Simon T. Perrault, and Daniel Vogel

Abstract: Bau and Mackay's OctoPocus dynamic guide helps novices learn, execute, and remember 2D surface gestures. We adapt OctoPocus to 3D mid-air gestures in Virtual Reality (VR) using an optimization-based recognizer, and by introducing an optional exploration mode to help visualize the spatial complexity of guides in a 3D gesture set. A replication of the original experiment protocol is used to compare OctoPocus in VR with a VR implementation of a crib-sheet. Results show that despite requiring 0.9s more reaction time than crib-sheet, OctoPocus enables participants to execute gestures 1.8s faster with 13.8% more accuracy during training, while remembering a comparable number of gestures. Subjective ratings support these results: 75% of participants found OctoPocus easier to learn and 83% found it more accurate. We contribute an implementation and empirical evidence demonstrating that an adaptation of the OctoPocus guide to VR is feasible and beneficial.

An examination of mobile phone pointing in surface mapped spatial augmented reality [IJHCS 2021]

Authors: Jeremy Hartmann and Daniel Vogel

Abstract: We investigate mobile phone pointing in Spatial Augmented Reality (SAR), where digital content is mapped onto the surfaces of a real physical environment. Three pointing techniques are compared: raycast, viewport, and direct. A first experiment examines these techniques in a realistic five-projector SAR environment with representative targets distributed across different surfaces. Participants were permitted free movement, so variations in target occlusion and target view angle occurred naturally. A second experiment validates and further generalizes findings by strictly controlling target occlusion and view angle in a simulated SAR pointing task using an AR HMD. Overall, results show raycast is fastest for non-occluded targets, direct is most accurate and is fastest for occluded targets in close proximity, and viewport falls in between. Using the experiment data, we formulate and evaluate a new Fitts’ model combining two spatial configurations in a SAR pointing task to capture key characteristics: initial target occlusion, target view angle, and user movement. Analysis shows it is a better predictor than previous models.
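
For readers unfamiliar with Fitts' law, the sketch below shows only the standard Shannon formulation that such models build on: an index of difficulty computed from target distance and width, and a movement time that is linear in it. The paper's extended model, with its terms for occlusion, view angle, and user movement, is not reproduced here. The coefficients `a` and `b` are made-up illustrative values, not results from the study.

```python
import math


def index_of_difficulty(distance, width):
    """Shannon formulation: ID = log2(D / W + 1), in bits."""
    return math.log2(distance / width + 1.0)


def predicted_movement_time(distance, width, a, b):
    """Baseline Fitts' law: MT = a + b * ID."""
    return a + b * index_of_difficulty(distance, width)


if __name__ == "__main__":
    # Hypothetical coefficients; real values come from fitting experiment data.
    a, b = 0.2, 0.1
    for distance, width in [(7.0, 1.0), (15.0, 1.0), (15.0, 0.5)]:
        bits = index_of_difficulty(distance, width)
        seconds = predicted_movement_time(distance, width, a, b)
        print(f"D={distance} W={width} ID={bits:.2f} bits MT={seconds:.2f} s")
```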

Website: https://www.sciencedirect.com/science/article/abs/pii/S107158192100080X

Links: PDF

Same Place, Different Space: Designing for Differing Physical Spaces in Social Virtual Reality [SOCIAL VR]

Authors: Johann Wentzel, Daekun Kim, Jeremy Hartmann

Abstract: How do you design a physically-accurate social VR space for multiple users when one has a small office and the other has a gymnasium? We discuss the trade-offs and challenges for designing physically-accurate VR spaces for multiple users via the physical-virtual spectrum, and discuss possible approaches for resolving the conflicts between VR users' varying physical environments. We provide initial discussion and directions for future research.

Website: https://johannwentzel.ca/projects/social-vr-CHI21/

AAR: Augmenting a Wearable Augmented Reality Display with an Actuated Head-Mounted Projector [UIST 2020]

Authors: Jeremy Hartmann, Yen-Ting Yeh, and Daniel Vogel

Abstract: Current wearable AR devices create an isolated experience with a limited field of view, vergence-accommodation conflict, and difficulty communicating the virtual environment to observers. To address these issues and enable new ways to visualize, manipulate, and share virtual content, we introduce Augmented Augmented Reality (AAR) by combining a wearable AR display with a wearable spatial augmented reality projector. To explore this idea, a system is constructed to combine a head-mounted actuated pico projector with a HoloLens AR headset. Projector calibration uses a modified structure from motion pipeline to reconstruct the geometric structure of the pan-tilt actuator axes and offsets. A toolkit encapsulates a set of high-level functionality to manage content placement relative to each augmented display and the physical environment. Demonstrations showcase ways to utilize the projected and head-mounted displays together, such as expanding field of view, distributing content across depth surfaces, and enabling bystander collaboration.

View-Dependent Effects for 360° Virtual Reality Video [UIST 2020]

Authors: Jeremy Hartmann, Stephen DiVerdi, Cuong Nguyen, and Daniel Vogel

Abstract: “View-dependent effects” have parameters that change with the user’s view and are rendered dynamically at runtime. They can be used to simulate physical phenomena such as exposure adaptation, as well as for dramatic purposes such as vignettes. We present a technique for adding view-dependent effects to 360 degree video, by interpolating spatial keyframes across an equirectangular video to control effect parameters during playback. An in-headset authoring tool is used to configure effect parameters and set keyframe positions. We evaluate the utility of view-dependent effects with expert 360 degree filmmakers and the perception of the effects with a general audience. Results show that experts find view-dependent effects desirable for their creative purposes and that these effects can evoke novel experiences in an audience.
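
One way to picture spatial keyframes is as effect values pinned to view directions on the sphere, blended according to where the viewer is looking. The sketch below is an assumption-laden illustration, not the paper's method: it uses inverse-distance weighting over great-circle angles, which is a swapped-in interpolation scheme chosen for simplicity. The names `angular_distance`, `equirect_uv`, and `effect_value` are hypothetical.

```python
import math


def angular_distance(yaw1, pitch1, yaw2, pitch2):
    """Great-circle angle in radians between two view directions (radians)."""
    cos_angle = (
        math.sin(pitch1) * math.sin(pitch2)
        + math.cos(pitch1) * math.cos(pitch2) * math.cos(yaw1 - yaw2)
    )
    return math.acos(max(-1.0, min(1.0, cos_angle)))


def equirect_uv(yaw, pitch):
    """Map yaw in [-pi, pi] and pitch in [-pi/2, pi/2] to (u, v) in [0, 1]."""
    return (yaw / (2.0 * math.pi) + 0.5, 0.5 - pitch / math.pi)


def effect_value(yaw, pitch, keyframes, power=2.0):
    """Blend keyframe values by inverse angular distance (assumed scheme).

    keyframes is a list of (yaw, pitch, value) tuples.
    """
    numerator = 0.0
    denominator = 0.0
    for key_yaw, key_pitch, value in keyframes:
        d = angular_distance(yaw, pitch, key_yaw, key_pitch)
        if d < 1e-9:
            return value  # Looking straight at a keyframe: use it directly.
        weight = 1.0 / d ** power
        numerator += weight * value
        denominator += weight
    return numerator / denominator


if __name__ == "__main__":
    # Hypothetical exposure keyframes: bright ahead, dark behind.
    keyframes = [(0.0, 0.0, 1.0), (math.pi, 0.0, 0.0)]
    for yaw_deg in (0, 45, 90, 135):
        value = effect_value(math.radians(yaw_deg), 0.0, keyframes)
        print(f"yaw={yaw_deg:3d} deg exposure={value:.3f}")
```

Measuring distance as an angle on the sphere, rather than in equirectangular pixel space, avoids the distortion near the poles and the seam at the left and right edges of the frame.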

Website: https://jjhartmann.github.io/ViewDependentEffects/

Extend, Push, Pull: Smartphone Mediated Interaction in Spatial Augmented Reality via Intuitive Mode Switching [SUI 2020]

Authors: Jeremy Hartmann, Aakar Gupta, and Daniel Vogel

Abstract: We investigate how smartphones can be used to mediate the manipulation of smartphone-based content in spatial augmented reality (SAR). A major challenge here is in seamlessly transitioning a phone between its use as a smartphone and its use as a controller for SAR. Most users are familiar with hand extension as a way of using a remote control for SAR. We therefore propose to use hand extension as an intuitive mode switching mechanism for switching back and forth between the mobile interaction mode and the spatial interaction mode. Based on this intuitive mode switch, our technique enables the user to push smartphone content to an external SAR environment, interact with the external content, rotate-scale-translate it, and pull the content back into the smartphone, all the while ensuring no conflict between mobile interaction and spatial interaction. To ensure feasibility of hand extension as a mode switch, we evaluate the classification of extended and retracted states of the smartphone based on the phone’s relative 3D position with respect to the user’s head while varying user postures, surface distances, and target locations. Our results show that a random forest classifier can classify the extended and retracted states with 96% accuracy on average.
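
To make the input to such a classifier concrete, the sketch below computes the phone's position relative to the head and applies a fixed distance threshold. This is a deliberately simplified stand-in and not the random forest classifier evaluated in the paper. The threshold value and the names `relative_position` and `is_extended` are hypothetical.

```python
import math

# Hypothetical threshold in metres; the paper learns the boundary from data.
EXTEND_THRESHOLD_M = 0.45


def relative_position(phone_pos, head_pos):
    """Phone position expressed as an offset from the head (same world axes)."""
    return tuple(p - h for p, h in zip(phone_pos, head_pos))


def is_extended(phone_pos, head_pos, threshold=EXTEND_THRESHOLD_M):
    """Stand-in rule: the phone counts as extended when it is far from the head."""
    return math.dist(phone_pos, head_pos) >= threshold


if __name__ == "__main__":
    head = (0.0, 1.6, 0.0)
    for phone in [(0.0, 1.3, 0.2), (0.0, 1.4, 0.6)]:
        offset = relative_position(phone, head)
        mode = "spatial" if is_extended(phone, head) else "mobile"
        print(offset, "->", mode, "interaction mode")
```

A single threshold ignores posture and reach differences between users, which is presumably why a learned classifier over the full 3D offset is the better fit for the task described above.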

Website: https://jjhartmann.github.io/ExtendPushPull/

RealityCheck: Blending Virtual Environments with Situated Physical Reality [CHI 2019]

Authors: Jeremy Hartmann, Christian Holz, Eyal Ofek, and Andrew D. Wilson

Abstract: Today's virtual reality (VR) systems offer chaperone rendering techniques that prevent the user from colliding with physical objects. Without a detailed geometric model of the physical world, these techniques offer limited possibility for more advanced compositing between the real world and the virtual. We explore this using a realtime 3D reconstruction of the real world that can be combined with a virtual environment. RealityCheck allows users to freely move, manipulate, observe, and communicate with people and objects situated in their physical space without losing the sense of immersion or presence inside their virtual world. We demonstrate RealityCheck with seven existing VR titles, and describe compositing approaches that address the potential conflicts when rendering the real world and a virtual environment together. A study with frequent VR users demonstrates the affordances provided by our system and how it can be used to enhance current VR experiences.

Links: PDF ACM

An Evaluation of Mobile Phone Pointing in Spatial Augmented Reality [CHI 2018]

Authors: Jeremy Hartmann and Daniel Vogel

Abstract: We investigate mobile phone pointing in Spatial Augmented Reality (SAR). Three pointing methods are compared: raycasting, viewport, and direct contact ("tangible"), using a five-projector "full" SAR environment with targets distributed on varying surfaces. Participants were permitted free movement in the environment to create realistic variations in target occlusion and target incident angle. Our results show raycast is fastest for high and distant targets, tangible is fastest for targets within close proximity to the user, and viewport performance is in-between.

Links: PDF POSTER

Using Conformity to Probe Interaction Challenges in XR Collaboration [CHI 2018]

Authors: Jeremy Hartmann, Hemant Surale, Aakar Gupta, and Daniel Vogel

Abstract: The concept of a conformity spectrum is introduced to describe the degree to which virtualization adheres to real world physical characteristics surrounding the user. This is then used to examine interaction challenges when collaborating across different levels of virtuality and conformity.

Links: PDF ARXIV